YouTube started out as a website for storing and watching videos online and has grown into a large, unique platform with almost no comparable competitors.

However, according to one of the developers who worked on its recommendation algorithm, that algorithm is not really that great.

YouTube recommendations are a waste of time

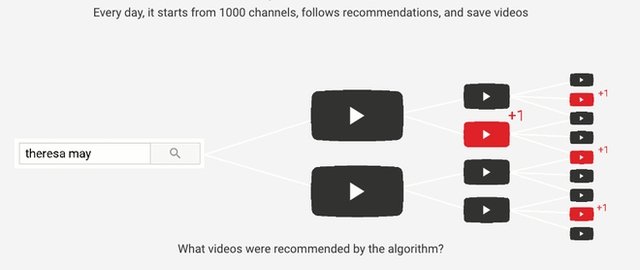

YouTube suggestions appear in the “Up Next” list on the right side of the screen, and they play automatically when the autoplay feature is turned on.

However, according to Guillaume Chaslot, a developer who worked with Google on YouTube’s recommendation algorithm, this is not entirely true.

“It’s not terrible that YouTube uses AI to recommend videos to you, because if the AI is fine-tuned, it can help you get what you want.”

As Chaslot explains, the measure of the algorithm’s success is watch time.

In his speech at the DisinfoLab Conference last month, Chaslot emphasized that divisive and sensational content, such as conspiracy theories and fake news, is often widely recommended.

Even if Google disagrees with Chaslot’s statement, Mark Zuckerberg himself admitted last year that this kind of borderline content attracts more engagement.

“We recognize that YouTube’s recommendations are toxic and that they go against civic discussion.”

Harmful suggestions

Essentially, the more outlandish the content you make, the more people want to watch it, and the more the algorithm keeps recommending it, which results in greater revenue for the creator.

The basic structure of YouTube’s recommendation algorithm was previously refined for its core content types – like cat videos, video games and music.

When the Mueller report was released, detailing whether there was any collusion between Russia and President Donald Trump’s election campaign, Chaslot found that the suggestion from most channels was a video

The irony is that if Chaslot is right, YouTube’s algorithm is amplifying a Russian video to explain whether Russia was involved in the campaign or not.

What is the solution for you?

For Chaslot, the YouTube algorithm’s recommendations are broken.

Essentially, his tool, AlgoTransparency, finds out which videos are being recommended from the most channels, giving you an overview that you can’t get from your own individual recommendations.
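The idea of ranking videos by how many channels surface them can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not AlgoTransparency's actual code: the function name `most_recommended`, the input shape (a mapping from channel to the videos suggested from it), and the sample channel and video names are all hypothetical.

```python
from collections import Counter


def most_recommended(recs_by_channel, top_n=3):
    """Rank videos by the number of distinct channels that suggest them."""
    counts = Counter()
    for videos in recs_by_channel.values():
        # Use a set so a video suggested twice from one channel counts once.
        counts.update(set(videos))
    # Sort by channel count (descending), then by video id for a stable order.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:top_n]


# Hypothetical sample data: channel -> videos suggested from that channel.
sample = {
    "channel_a": ["video_1", "video_2"],
    "channel_b": ["video_1", "video_3"],
    "channel_c": ["video_1", "video_2", "video_2"],
}

print(most_recommended(sample))
# [('video_1', 3), ('video_2', 2), ('video_3', 1)]
```

Counting distinct channels rather than raw suggestion counts is a deliberate choice in this sketch: it highlights videos that are pushed broadly across the platform instead of ones repeated heavily from a single channel.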

Google of course disagrees with AlgoTransparency’s method and says it does not accurately reflect the way YouTube’s recommendation algorithm works – which relies on surveys, likes, dislikes and shares.

Chaslot’s solution isn’t the only way to get a clear view of the differences in YouTube’s recommendation algorithm.

“The best short-term solution is simply to remove the suggestion function.”

Source: The Next Web